Welcome Back to Heron Watch 🕵️‍♂️🐦

In the last post, I introduced my slightly ridiculous but fully serious attempt to defend my garden pond from a feathered fish thief. Long story short: AI detected gray heron + sprinkler system = nobody touches the koi.

👈 Previously in the Heron Saga…

But this time, we’re going under the hood.

This blog entry is all about the architecture—the system of microservices that work together behind the scenes to catch the heron in action. It’s over-engineered in the most delightful way and also happens to be a great demonstration of distributed system design in a real-world (well, backyard) use case.

Let’s break it down.

🧩 The Big Picture

Here’s the full high-level architecture:

    (Home Assistant)"]

    %% ───────────────────────────────────────────
    %% 4. Inference
    Orchestrator -->|POST image| Inference
    Inference["inference-svc"]
    Inference -->|JSON results| Orchestrator
    Orchestrator -->|publishes detections| detections
    Orchestrator -->|publishes predicted JPEGs| predicted_frames

    %% ───────────────────────────────────────────
    %% 5. Clip extraction
    ClipExtractor["clip-extractor-svc"]
    detections --> ClipExtractor

    %% ───────────────────────────────────────────
    %% 6. Clip buffer & storage
    ClipBuffer["clip-buffer-svc"]
    ClipBuffer -->|maintains ring| Buffer
    subgraph Storage["Storage"]
      direction TB
      Buffer["/buffer (ring segments)"]
      Clips["/clips (saved MP4s)"]
    end
    Buffer --> ClipExtractor
    ClipExtractor -->|writes clips| Clips
    Clips --> Dashboard

    %% ───────────────────────────────────────────
    %% 7. Dashboard & user
    subgraph DashboardGrp["Dashboard"]
      direction TB
      predicted_frames --> Dashboard
      Dashboard["dashboard-svc"]
      Dashboard -->|serves live + clips| User
      User["User / Browser"]
    end

This may look like a wall of arrows, but fear not. We’re going to zoom into each part and explore the services that make this backyard watchdog magic happen.

📨 Why RabbitMQ?

Before we dive into the service specifics, let’s talk message brokers. In a microservices setup, different components need to communicate efficiently without yelling across the room. That’s where RabbitMQ hops in (pun 100% intended).

RabbitMQ is a lightweight, battle-tested message broker that allows asynchronous communication between services. One service can publish a message (e.g., a new frame), and another can pick it up and do something useful (e.g., run inference, store it, ignore it).

In this project, RabbitMQ is the glue between:

- The camera frame grabber

- The AI inference engine

- The detection pipeline

- The clip extraction logic

- The dashboard UI

- And anything else that needs event-based comms

RabbitMQ supports features like persistent queues, routing keys, and fanout exchanges, which make it ideal for this kind of loosely coupled, event-driven architecture.
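
If "fanout" is a new word for you, here is a toy in-memory model of the idea. It is not RabbitMQ and does not use any client library. It only shows the semantics: every queue bound to a fanout exchange receives its own copy of each message. The queue names are made up for illustration.

```python
from collections import deque
from typing import Deque, Dict


class FanoutExchange:
    """Toy stand-in for a fanout exchange: every bound queue gets a copy."""

    def __init__(self) -> None:
        self.queues: Dict[str, Deque[bytes]] = {}

    def bind(self, queue_name: str) -> None:
        # Binding creates the queue if it does not exist yet.
        self.queues.setdefault(queue_name, deque())

    def publish(self, message: bytes) -> None:
        # Fanout ignores routing keys and copies the message to all queues.
        for queue in self.queues.values():
            queue.append(message)


exchange = FanoutExchange()
exchange.bind("dashboard")        # hypothetical consumer queue
exchange.bind("clip-extractor")   # hypothetical consumer queue
exchange.publish(b"heron spotted")
```

Both consumers can now process the same event at their own pace, which is exactly the loose coupling a real broker provides.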

🛠️ Could I have used MQTT instead? Yes, totally. In fact, for smaller projects or pure smart home use, MQTT alone could do the job — especially with things like MQTT Image. But I saw this as a great opportunity to showcase RabbitMQ as a proper message broker with queues and fanout and not just a pub/sub pipe.

🚀 What about Kafka + MinIO? If this were a large-scale surveillance system with thousands of cameras and multi-TB video buffers, I’d reach for Apache Kafka and store the footage on MinIO or S3. But this is a backyard project with exactly one IP cam and one bird.

📸 frame-capture-svc: The Watchful Eye

This service does one job — and does it with laser focus (or… lens focus?).

It connects to an RTSP-enabled IP camera, pulls JPEG snapshots at a configured FPS, and tosses them into the `raw_frames` RabbitMQ queue for the rest of the pipeline to handle.

Think of it as the watchtower of the system — always vigilant, always streaming.
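
To make the pacing concrete, here is a minimal sketch of such a capture loop. It is not the actual service code. The `grab_jpeg` and `publish` callables are hypothetical placeholders for "read a snapshot from the camera" and "send bytes to `raw_frames`", injected so the timing logic stays independent of any camera or broker library.

```python
import time
from typing import Callable, Optional


def frame_interval(fps: float) -> float:
    """Seconds to wait between snapshots for a given FPS setting."""
    if fps <= 0:
        raise ValueError("FPS must be positive")
    return 1.0 / fps


def capture_loop(
    grab_jpeg: Callable[[], Optional[bytes]],
    publish: Callable[[bytes], None],
    fps: float,
    max_frames: int,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Grab up to max_frames snapshots at roughly `fps` and publish each one.

    Returns the number of frames that were actually published.
    """
    interval = frame_interval(fps)
    published = 0
    for _ in range(max_frames):
        started = time.monotonic()
        jpeg = grab_jpeg()
        if jpeg is not None:  # skip dropped or undecodable frames
            publish(jpeg)
            published += 1
        # Sleep only for what is left of this frame's time slot.
        elapsed = time.monotonic() - started
        sleep(max(0.0, interval - elapsed))
    return published
```

With the pika client, `publish` could wrap a call such as `channel.basic_publish(exchange="", routing_key="raw_frames", body=jpeg)`, but that wiring is an assumption on my side and left out here.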

Here’s how it’s configured in the docker-compose.yml:

    frame-capture-svc:
      build: ./frame-capture-svc
      environment:
        - RTSP_URL=rtsp://user:password@ip_address:554/live/ch1
        - RABBIT_HOST=rabbitmq
        - FPS=2
      volumes:
        - ./frame-capture-svc/frames:/app/frames
      depends_on:
        - rabbitmq

🔍 A few details

- `RTSP_URL` points to the IP camera stream.

- `RABBIT_HOST` tells it where RabbitMQ lives.

- `FPS=2` means we’re capturing two frames per second, plenty for our birdy burglar.

- The volume mount is just for debugging: you can see which frames it actually captured.

🧠 inference-svc: The Brain

Once a frame is captured, the orchestrator-svc (we’ll get to that) sends it over to inference-svc, which wraps around a YOLOv8 ONNX model. This is where object detection happens.

The service returns clean JSON with detected objects, classes, bounding boxes, and confidence scores. It’s built using FastAPI + Uvicorn + ONNX for snappy performance.

    inference-svc:
      build: ./inference-svc
      volumes:
        - ./inference-svc/model:/app/model
      environment:
        - RABBIT_HOST=rabbitmq
        - MODEL_PATH=/app/model/best.onnx
        - CONF_THRESHOLD=0.6
      depends_on:
        - rabbitmq

🔍 A few details

- `MODEL_PATH` points to the ONNX model file.

- `CONF_THRESHOLD` is the confidence threshold for detections (0.6 means 60% confidence).

- `RABBIT_HOST`: of course we need that RabbitMQ host again.

- The volume mount is where the model file lives.

- The model is loaded once and reused for each request, making it efficient.
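
To illustrate what the confidence threshold does, here is a small sketch. The response shape (`detections`, `class`, `confidence`, `bbox`) is an assumption made up for this example; the real JSON returned by the service may look different.

```python
from typing import Dict, List

# Hypothetical response shape; the real inference-svc JSON may differ.
SAMPLE_RESPONSE = {
    "detections": [
        {"class": "heron", "confidence": 0.91, "bbox": [412, 180, 655, 540]},
        {"class": "dog", "confidence": 0.42, "bbox": [30, 300, 120, 410]},
    ]
}


def filter_by_confidence(detections: List[Dict], threshold: float) -> List[Dict]:
    """Keep only detections whose confidence is at or above the threshold."""
    return [d for d in detections if d["confidence"] >= threshold]


kept = filter_by_confidence(SAMPLE_RESPONSE["detections"], threshold=0.6)
print([d["class"] for d in kept])
```

With a threshold of 0.6, the low-confidence "dog" is dropped and only the heron survives.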

Why a separate service? Because:

- We want to scale inference independently (add more instances if needed)

- It keeps the model logic isolated and testable

- It enables us to swap models easily (e.g., YOLOv4, YOLOv7, YOLOv9, etc.)

🕹 orchestrator-svc: The Puppet Master

The heart of the system.

It orchestrates everything by:

- Consuming frames from the `raw_frames` queue

- Sending the frames to the `inference-svc` for detection

- Publishing the predicted frames to the `predicted_frames` queue

- Checking the detection results and, if a class of interest is detected:

  - Publishing the detection results to the `detections` queue

  - Sending an MQTT trigger to Home Assistant (or any other system)

Think of it as the conductor in our bird-detecting orchestra.
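
The decision logic for a single frame can be sketched roughly like this. It is a simplified illustration, not the service's actual code: the detection dictionaries and the action tuples are hypothetical, and the real publishing and MQTT calls are left out.

```python
from typing import Dict, List, Set, Tuple


def parse_classes(raw: str) -> Set[str]:
    """Turn a DETECTION_CLASSES value like 'heron,person,dog' into a set."""
    return {name.strip() for name in raw.split(",") if name.strip()}


def plan_actions(
    detections: List[Dict], classes_of_interest: Set[str]
) -> List[Tuple[str, object]]:
    """Decide what the orchestrator should emit for one processed frame."""
    # Every predicted frame goes to the predicted_frames queue.
    actions: List[Tuple[str, object]] = [("publish", "predicted_frames")]
    hits = [d for d in detections if d["class"] in classes_of_interest]
    if hits:
        # A class of interest was seen: notify the clip pipeline and MQTT.
        actions.append(("publish", "detections"))
        actions.append(("mqtt_trigger", sorted({d["class"] for d in hits})))
    return actions


classes = parse_classes("heron, person,dog")
print(plan_actions([{"class": "heron", "confidence": 0.91}], classes))
```

Keeping this as a pure function makes it easy to test the rules without a broker, a model, or a bird.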

    orchestrator-svc:
      build: ./orchestrator-svc
      environment:
        - RABBIT_HOST=rabbitmq
        - INFERENCE_URL=http://inference-svc:8000/detect
        - DETECTION_CLASSES=heron # or list: heron,person,dog
        - MQTT_HOST=homeassistant.fritz.box
        - MQTT_PORT=1883
        - MQTT_USERNAME=admin
        - MQTT_PASSWORD=redacted
      depends_on:
        - rabbitmq

🔍 A few details

- `RABBIT_HOST` is the RabbitMQ broker.

- `INFERENCE_URL` is the URL of the inference service.

- `DETECTION_CLASSES` is a comma-separated list of classes to detect (e.g., `heron,person,dog`).

- `MQTT_HOST` is the MQTT broker (Home Assistant in my case).

- `MQTT_PORT`, `MQTT_USERNAME`, and `MQTT_PASSWORD` are for connecting to the MQTT broker.

- The `depends_on` makes Compose start RabbitMQ before the orchestrator. Note that it only controls start order; it does not wait for the broker to be ready.

- The orchestrator is the only service that needs to know about the MQTT broker, so it’s the only one that has those credentials.

🌀 clip-buffer-svc: The Ringmaster

This service continuously records small segments (like 5-second HLS chunks) and keeps them in a ring buffer stored in `/buffer`.

The idea is: even before a heron is detected, the footage is already waiting in a rolling loop. This way, we don’t miss the “approach” part of the action.

Ring buffer + fast access = juicy pre-roll clips.

    clip-buffer-svc:
      build: ./clip-buffer-svc
      environment:
        - RTSP_URL=rtsp://user:password@ip_address:554/live/ch1
      volumes:
        - buffer:/buffer
      depends_on:
        - rabbitmq

🔍 A few details

Not much to say here:

- `RTSP_URL` is the same as before.

- `/buffer` is a Docker volume that stores the ring segments.

- It’s built using FFmpeg to handle the video stream.

- Each segment is named after the timestamp at which it was recorded, so we can easily find the right one later.
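
The "ring" part boils down to throwing away the oldest segments. Here is a minimal sketch of that idea, under a loud assumption: segments are named by epoch seconds, like `1718000000.ts`. The real naming scheme and the way the service prunes files (FFmpeg can also do this on its own) may differ.

```python
from pathlib import Path
from typing import List


def segments_to_delete(buffer_dir: Path, keep: int) -> List[Path]:
    """Return the oldest segment files beyond the newest `keep` ones.

    Assumes names like '1718000000.ts' (epoch seconds), so sorting by the
    numeric stem equals chronological order.
    """
    segments = sorted(buffer_dir.glob("*.ts"), key=lambda p: int(p.stem))
    return segments[:-keep] if keep > 0 else segments


def prune(buffer_dir: Path, keep: int) -> int:
    """Delete all but the newest `keep` segments; return how many were removed."""
    doomed = segments_to_delete(buffer_dir, keep)
    for path in doomed:
        path.unlink()
    return len(doomed)
```

Calling `prune(Path("/buffer"), keep=24)` after each new 5-second segment would hold roughly two minutes of footage; the value 24 is just an example.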

✂️ clip-extractor-svc: The Editor

When the orchestrator detects a heron, it publishes a message to the detections queue.

The clip-extractor-svc consumes messages from this queue and fetches the relevant segments from the ring buffer.

It uses a 10-second pre-roll and a 10-second post-roll, so it captures the whole action.

It waits after the detection to ensure the heron is gone before stopping the recording.

It stitches the segments together into a single MP4 file using FFmpeg and saves it in `/clips`.

After the clip is saved, it generates a thumbnail of the clip for the dashboard.
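
Picking the right segments is mostly interval arithmetic. The sketch below assumes 5-second segments whose file names are their start time in epoch seconds (for example `/buffer/1718000000.ts`). Both are assumptions for illustration, and the helper names are made up.

```python
from typing import List

SEGMENT_SECONDS = 5  # assumed segment length
PRE_ROLL = 10        # seconds kept before the detection
POST_ROLL = 10       # seconds kept after the detection


def select_segments(segment_starts: List[int], detection_ts: float) -> List[int]:
    """Return start times of segments overlapping the pre/post-roll window."""
    window_start = detection_ts - PRE_ROLL
    window_end = detection_ts + POST_ROLL
    return sorted(
        s for s in segment_starts
        if s < window_end and s + SEGMENT_SECONDS > window_start
    )


def concat_list(segment_starts: List[int], buffer_dir: str = "/buffer") -> str:
    """Build the text file content that FFmpeg's concat demuxer reads."""
    return "".join(f"file '{buffer_dir}/{s}.ts'\n" for s in segment_starts)


starts = list(range(100, 145, 5))          # segments starting at 100, 105, ... 140
chosen = select_segments(starts, detection_ts=122)
print(concat_list(chosen))
```

The resulting list file can then be handed to something like `ffmpeg -f concat -safe 0 -i list.txt -c copy clip.mp4`, which joins the segments without re-encoding.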

    clip-extractor-svc:
      build: ./clip-extractor-svc
      environment:
        - RABBIT_HOST=rabbitmq
        - BUFFER_DIR=/buffer
        - OUTPUT_DIR=/clips
      volumes:
        - buffer:/buffer
        - clips:/clips
      depends_on:
        - rabbitmq
        - clip-buffer-svc

🔍 A few details

- `RABBIT_HOST` is the RabbitMQ broker.

- `BUFFER_DIR` is the path to the ring buffer inside the container.

- `OUTPUT_DIR` is the path where the clips are saved inside the container.

- The `volumes` mount the buffer and clips directories.

- The `depends_on` makes Compose start RabbitMQ and the clip buffer before the clip extractor.

Yes, the heron now stars in its own surveillance reels.

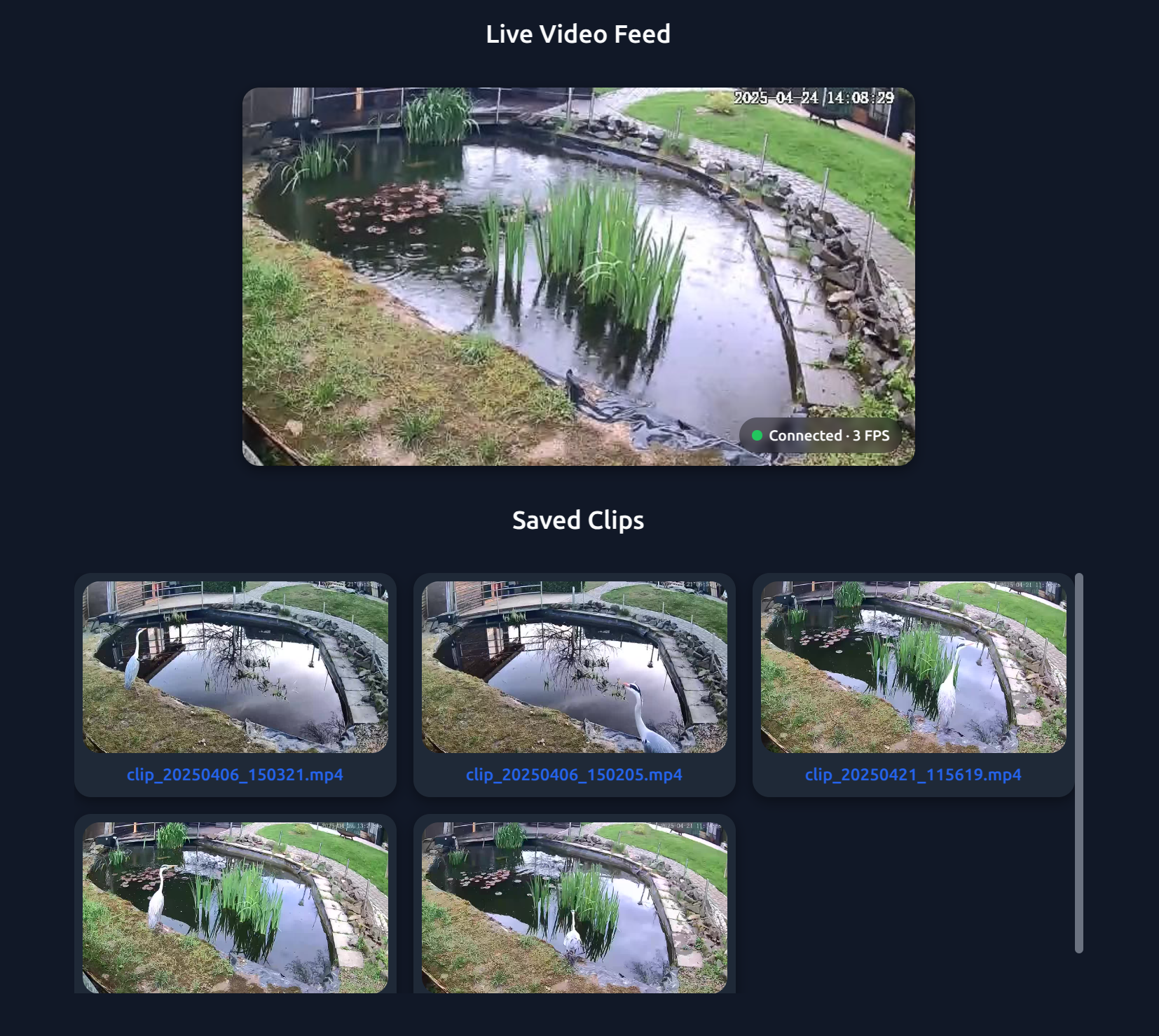

📊 dashboard-svc: The Control Center

A Flask + Socket.IO dashboard that:

- Streams live frames

- Shows the latest detections

- Provides access to saved clips

- Displays logs and status of services

Perfect for watching the heron get mildly inconvenienced in real-time.

    dashboard-svc:
      build: ./dashboard-svc
      ports:
        - "5000:5000"
      environment:
        - RABBIT_HOST=rabbitmq
        - CLIPS_DIR=/clips
      volumes:
        - clips:/clips
      depends_on:
        - rabbitmq

🔍 A few details

- `RABBIT_HOST` is, of course, the RabbitMQ broker again.

- `CLIPS_DIR` is the path to the saved clips inside the container.

- The `volumes` mount the clips directory.

- The `ports` expose the dashboard on port 5000.

- The `depends_on` makes Compose start RabbitMQ before the dashboard.

🧩 System Design Insights

Wondering about the setup? Each service runs in its own Docker container, defined in a docker-compose.yml. They talk to each other via RabbitMQ and are designed to be modular—swap in a new model, add more cameras, or update a service without touching the rest.

Environment variables keep everything configurable without changing code—via a .env file or directly in docker-compose.
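
As a rough illustration of that pattern, a service could read its settings like this. The `CaptureConfig` class and the default values are hypothetical; only the variable names `RTSP_URL`, `RABBIT_HOST`, and `FPS` come from the compose file.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class CaptureConfig:
    rtsp_url: str
    rabbit_host: str
    fps: float


def load_config(env: Mapping[str, str] = os.environ) -> CaptureConfig:
    """Read settings from the environment, falling back to local-dev defaults."""
    return CaptureConfig(
        # The default URL is a made-up placeholder for a local test stream.
        rtsp_url=env.get("RTSP_URL", "rtsp://localhost:8554/test"),
        rabbit_host=env.get("RABBIT_HOST", "localhost"),
        fps=float(env.get("FPS", "2")),
    )


config = load_config({"FPS": "4"})
print(config)
```

Passing the environment in as a mapping keeps the function easy to test, while the default `os.environ` makes it work unchanged inside a container.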

During development, you don’t need containers at all. Just run services locally with defaults. Only the RTSP stream needs a real or virtual camera, but I’m working on a mocked RTSP setup using a sample video and two lightweight containers.

🧑‍💻 User Experience

All this finally reaches the user—me—via a web dashboard. From here, I can review detections, debug the system, or just enjoy the drama of wildlife surveillance with a fresh coffee.

🧠 Why Microservices?

You might ask, “Why not just write a monolith?”

Great question. Here’s why I went full microservice mode:

- Modularity: Each service is simple and focused

- Scalability: Run inference-svc on a beefier machine if needed

- Fault Isolation: One crash doesn’t take the whole system down

- Asynchronicity: RabbitMQ helps decouple everything cleanly

Also… because it’s fun.

Stay tuned for future posts in this series:

- Annotating with style (and speed)

- Live deployment tips

- Dashboard UI & integration tricks

Until then: detect early, spray responsibly.

Fun fact: Herons hate sudden loud noises. Turns out they don’t YOLO—they flinch.